Category: Apple

Not a lot of details:

Apple has issued a new round of threat notifications to iPhone users across 98 countries, warning them of potential mercenary spyware attacks. It’s the second such alert campaign from the company this year, following a similar notification sent to users in 92 nations in April.

Recently, commuters in California, Paris, Singapore, Queensland, and London have been encountering Apple Inc.’s Safari Browser ads on billboards and public buildings. These ads cleverly promote Safari as the browser of choice for iPhone users while taking a swipe at Google’s Chrome browser. Google had recently admitted to collecting data from Chrome users, sparking concerns over privacy.

Apple’s ad campaign suggests that users worried about data security and privacy should switch to Safari for their browsing needs, emphasizing improvements like fixing the kids’ screen time bug. While some find Apple’s promotional tactics innovative, others criticize the approach for unfairly disparaging competitors.

Earlier this year, Google Chrome faced backlash for allegedly collecting data even in incognito mode, including cookies, search history, and location details. The controversy highlighted ongoing debates about tech companies’ ability to collect user data with or without consent.

Google, under its parent company Alphabet Inc., claims to prioritize user data security and control over content. However, discrepancies between policy and practice have been highlighted, notably in a 2020 lawsuit alleging data collection despite incognito mode usage.

In the past few days, Google issued security updates for its Chrome 125 browser, addressing nine vulnerabilities. This followed an alert from a bug bounty program about a potential security flaw that could allow remote hackers to inject code via an HTML page, posing a risk to browser stability.

Apple has remained committed to offering its users the highest level of privacy, and it does not cater to data-sharing demands from law enforcement agencies around the world.

The post Apple Safari Browser Data Security ad against Google Chrome appeared first on Cybersecurity Insiders.

Please see below comments by Kevin Surace, Chair, Token & “Father of the Virtual Assistant” for your consideration regarding any coverage on Apple’s recent AI announcement:

Apple has taken a “privacy and security first” approach to handling all generative AI interactions that must be processed in the cloud. No one else comes close at this point, and no one else has spelled out with full transparency how they intend to meet that high bar. More information can be found here: https://security.apple.com/blog/private-cloud-compute/.

Note that, at least for now, this is for Apple hardware product users who must trust that what they say to the AI is private to them and can never be stolen or learned from. It’s possible that some enterprises will evaluate the strength of this and allow their employees to use Apple devices with Apple Intelligence without fear.

Apple didn’t exactly state what silicon they used here. Is it a custom GPU cluster they designed or their own M4 processors, which include a neural engine and substantial GPU resources? But in typical Apple fashion, they have vertically integrated everything and taken ownership of its security from top to bottom. It’s impressive and ahead of AWS, Microsoft, and Google cloud offerings for LLMs thus far, even if it is just in support of Apple Intelligence features.

Apple has set the bar for absolute privacy and security of generative AI interactions. Everyone else will need to scramble now to meet this bar. This may allow enterprises to trust the Apple infrastructure for routine Apple Intelligence interactions, even those that include some corporate data.

Apple has developed its own foundation models that are very impressive but don’t yet beat out GPT-4. They publish their comparisons here: https://machinelearning.apple.com/research/introducing-apple-foundation-models. While Apple has not said what its partnership with OpenAI entails, they hint that when GPT-4 (or GPT-5 perhaps) is required for more accuracy, they will use it. To ensure absolute privacy, they would need to host it themselves in their Private Cloud Compute. They didn’t state that yesterday, so I suspect that the ink is still drying on those agreements with details to be worked out. But bouncing out to GPT-4 anytime won’t work. They suggested there would be an opt-in to that, so perhaps users give up some privacy when they opt to use GPT-4. How safe is OpenAI? They do provide various levels of private operation, but no one really knows how safe, secure, and non-sharing it actually is. While Apple has published an extensive security white paper, OpenAI has a short ChatGPT Enterprise privacy note, which certainly isn’t convincing Elon Musk it’s safe.

This is a world-class effort, one where Apple is inviting security experts to poke holes in its approach. I’d say it appears as rock solid as anything we have seen.

All data to the cloud is encrypted, so a simple man-in-the-middle attack won’t work. From what they are saying, one would have to break into their network, but they don’t even have any debugging tools enabled in runtime—no privileged runtime access. They even took major precautions against actual physical access (basically breaking into the data center). They state that they have made this so secure and so encrypted with no storage of your information that it isn’t a target. I’d say this is state-of-the-art from the silicon to the outer doors of the facility.

Apple is stating that they are using their own foundation models in the network and the devices. That’s first and foremost. Then they note a partnership with OpenAI, to be used only when required, and they will also use the best of breed models. They seem to be hedging their bets here. OpenAI is a bit of a black box. But I suspect either Apple will host it themselves or demand a very private instance for their users, and users have to opt-in to its use. They failed to give us more details on the partnership, so time will tell, but it’s clear Apple takes privacy and security seriously, and they realize the hesitancy when they mention OpenAI. My bet is they will do this right, and it won’t be an issue.

The post Expert comment: Apple AI safety & security appeared first on Cybersecurity Insiders.

Image: Shutterstock.

Apple and the satellite-based broadband service Starlink each recently took steps to address new research into the potential security and privacy implications of how their services geo-locate devices. Researchers from the University of Maryland say they relied on publicly available data from Apple to track the location of billions of devices globally — including non-Apple devices like Starlink systems — and found they could use this data to monitor the destruction of Gaza, as well as the movements and in many cases identities of Russian and Ukrainian troops.

At issue is the way that Apple collects and publicly shares information about the precise location of all Wi-Fi access points seen by its devices. Apple collects this location data to give Apple devices a crowdsourced, low-power alternative to constantly requesting global positioning system (GPS) coordinates.

Both Apple and Google operate their own Wi-Fi-based Positioning Systems (WPS) that obtain certain hardware identifiers from all wireless access points that come within range of their mobile devices. Both record the Media Access Control (MAC) address that a Wi-Fi access point uses, known as a Basic Service Set Identifier or BSSID.

Periodically, Apple and Google mobile devices will forward their locations — by querying GPS and/or by using cellular towers as landmarks — along with any nearby BSSIDs. This combination of data allows Apple and Google devices to figure out where they are within a few feet or meters, and it’s what allows your mobile phone to continue displaying your planned route even when the device can’t get a fix on GPS.

With Google’s WPS, a wireless device submits a list of nearby Wi-Fi access point BSSIDs and their signal strengths — via an application programming interface (API) request to Google — whose WPS responds with the device’s computed position. Google’s WPS requires at least two BSSIDs to calculate a device’s approximate position.

Apple’s WPS also accepts a list of nearby BSSIDs, but instead of computing the device’s location based on the set of observed access points and their received signal strengths and then reporting that result to the user, Apple’s API will return the geolocations of up to 400 more BSSIDs that are near the one requested. The device then uses approximately eight of those BSSIDs to work out the user’s location based on known landmarks.

In essence, Google’s WPS computes the user’s location and shares it with the device. Apple’s WPS gives its devices a large enough amount of data about the location of known access points in the area that the devices can do that estimation on their own.
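The device-side estimation described above can be illustrated with a minimal sketch. The source does not say which algorithm Apple devices actually use, so the signal-weighted centroid below is an assumption chosen for simplicity, and `AccessPoint`, `estimate_position`, and `max_landmarks` are hypothetical names. The only detail taken from the text is that roughly eight known access points serve as landmarks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessPoint:
    """A known access point location (hypothetical structure)."""
    bssid: str
    lat: float
    lon: float


def estimate_position(known, observed_rssi, max_landmarks=8):
    """Estimate (lat, lon) as a signal-weighted centroid of known access points.

    known: dict mapping BSSID -> AccessPoint (for example, locations returned by a WPS).
    observed_rssi: dict mapping BSSID -> received signal strength in dBm.
    This is an illustrative technique, not Apple's documented method.
    """
    # Keep only the access points the device can hear AND has a location for.
    candidates = [(known[b], rssi) for b, rssi in observed_rssi.items() if b in known]
    if not candidates:
        raise ValueError("no observed access point has a known location")

    # Strongest signals first (dBm values closer to zero are stronger).
    candidates.sort(key=lambda pair: pair[1], reverse=True)
    candidates = candidates[:max_landmarks]

    # Convert dBm to linear power so stronger signals pull the estimate harder.
    weights = [10 ** (rssi / 10) for _, rssi in candidates]
    total = sum(weights)
    lat = sum(ap.lat * w for (ap, _), w in zip(candidates, weights)) / total
    lon = sum(ap.lon * w for (ap, _), w in zip(candidates, weights)) / total
    return lat, lon
```

Averaging raw latitude and longitude is only reasonable over the short distances Wi-Fi covers; a production system would likely use a proper propagation model.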

That’s according to two researchers at the University of Maryland, who said they theorized they could use the verbosity of Apple’s API to map the movement of individual devices into and out of virtually any defined area of the world. The UMD pair said they spent a month early in their research continuously querying the API, asking it for the location of more than a billion BSSIDs generated at random.

They learned that while only about three million of those randomly generated BSSIDs were known to Apple’s Wi-Fi geolocation API, Apple also returned an additional 488 million BSSID locations already stored in its WPS from other lookups.

UMD Associate Professor David Levin and Ph.D. student Erik Rye found they could mostly avoid requesting unallocated BSSIDs by consulting the list of BSSID ranges assigned to specific device manufacturers. That list is maintained by the Institute of Electrical and Electronics Engineers (IEEE), which is also sponsoring the privacy and security conference where Rye is slated to present the UMD research later today.
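To see why restricting guesses to assigned ranges helps, consider the address space: a BSSID is 48 bits, and the first 24 bits form the manufacturer prefix (OUI) that the IEEE assigns. The sketch below draws random BSSIDs only inside a given prefix. The function name and the example prefix are illustrative assumptions, not details from the UMD paper.

```python
import random

TOTAL_ADDRESSES = 2 ** 48      # every possible 48-bit BSSID
ADDRESSES_PER_OUI = 2 ** 24    # addresses that share one 24-bit prefix


def random_bssid_in_oui(oui, rng):
    """Return a random BSSID whose first three octets equal the given OUI.

    oui: prefix such as "00:00:5e" (dashes are also accepted).
    rng: a random.Random instance, passed in so results can be reproduced.
    """
    parts = oui.lower().replace("-", ":").split(":")
    if len(parts) != 3 or any(len(p) != 2 for p in parts):
        raise ValueError(f"expected three octets, got {oui!r}")
    for p in parts:
        int(p, 16)  # raises ValueError if an octet is not hexadecimal

    # Only the last three octets are random; the prefix stays fixed.
    suffix = [f"{rng.randrange(256):02x}" for _ in range(3)]
    return ":".join(parts + suffix)


# Illustrative prefix only; a real run would iterate over the IEEE registry.
example = random_bssid_in_oui("00:00:5e", random.Random(0))
```

Each prefix covers about 16.8 million addresses, so sampling within assigned prefixes skips the large unassigned portion of the 2^48 space.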

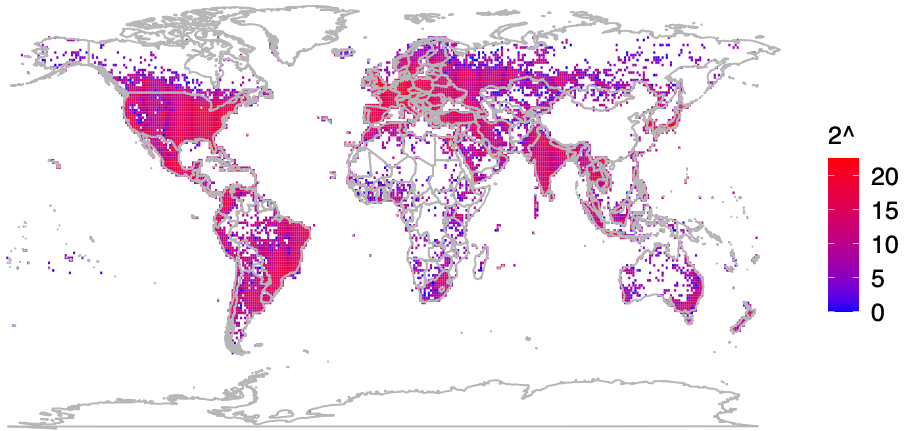

Plotting the locations returned by Apple’s WPS between November 2022 and November 2023, Levin and Rye saw they had a near global view of the locations tied to more than two billion Wi-Fi access points. The map showed geolocated access points in nearly every corner of the globe, apart from almost the entirety of China, vast stretches of desert wilderness in central Australia and Africa, and deep in the rainforests of South America.

The researchers said that by zeroing in on or “geofencing” other smaller regions indexed by Apple’s location API, they could monitor how Wi-Fi access points moved over time. Why might that be a big deal? They found that by geofencing active conflict zones in Ukraine, they were able to determine the location and movement of Starlink devices used by both Ukrainian and Russian forces.

The reason they were able to do that is that each Starlink terminal — the dish and associated hardware that allows a Starlink customer to receive Internet service from a constellation of orbiting Starlink satellites — includes its own Wi-Fi access point, whose location is going to be automatically indexed by any nearby Apple devices that have location services enabled.

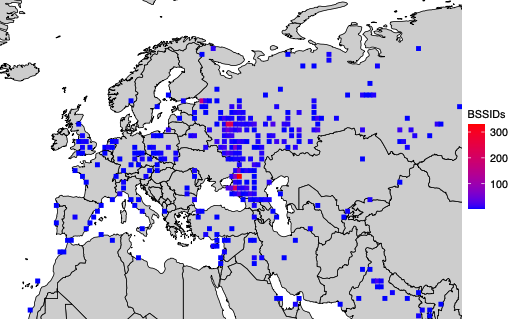

The University of Maryland team geo-fenced various conflict zones in Ukraine, and identified at least 3,722 Starlink terminals geolocated in Ukraine.
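Geofencing as described here amounts to filtering known access point locations by region and comparing snapshots taken at different times. The following is a minimal sketch on made-up data; `BoundingBox`, `bssids_in_fence`, and `fence_changes` are hypothetical names, and the rectangular fence is a simplification that does not handle regions crossing the 180° meridian.

```python
from typing import NamedTuple


class BoundingBox(NamedTuple):
    """A rectangular geofence in decimal degrees (hypothetical helper)."""
    south: float
    west: float
    north: float
    east: float

    def contains(self, lat, lon):
        return self.south <= lat <= self.north and self.west <= lon <= self.east


def bssids_in_fence(snapshot, fence):
    """Return the set of BSSIDs whose recorded location lies inside the fence.

    snapshot: dict mapping BSSID -> (lat, lon) at one point in time.
    """
    return {b for b, (lat, lon) in snapshot.items() if fence.contains(lat, lon)}


def fence_changes(before, after, fence):
    """Compare two snapshots and report which BSSIDs entered, left, or stayed."""
    inside_before = bssids_in_fence(before, fence)
    inside_after = bssids_in_fence(after, fence)
    return {
        "entered": inside_after - inside_before,
        "left": inside_before - inside_after,
        "stayed": inside_before & inside_after,
    }
```

A BSSID that shows up under "left" may have moved elsewhere or simply dropped out of the data, so the two cases need to be distinguished by checking where, if anywhere, it appears in the later snapshot.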

“We find what appear to be personal devices being brought by military personnel into war zones, exposing pre-deployment sites and military positions,” the researchers wrote. “Our results also show individuals who have left Ukraine to a wide range of countries, validating public reports of where Ukrainian refugees have resettled.”

In an interview with KrebsOnSecurity, the UMD team said they found that in addition to exposing Russian troop pre-deployment sites, the location data made it easy to see where devices in contested regions originated from.

“This includes residential addresses throughout the world,” Levin said. “We even believe we can identify people who have joined the Ukraine Foreign Legion.”

A simplified map of where BSSIDs that enter the Donbas and Crimea regions of Ukraine originate. Image: UMD.

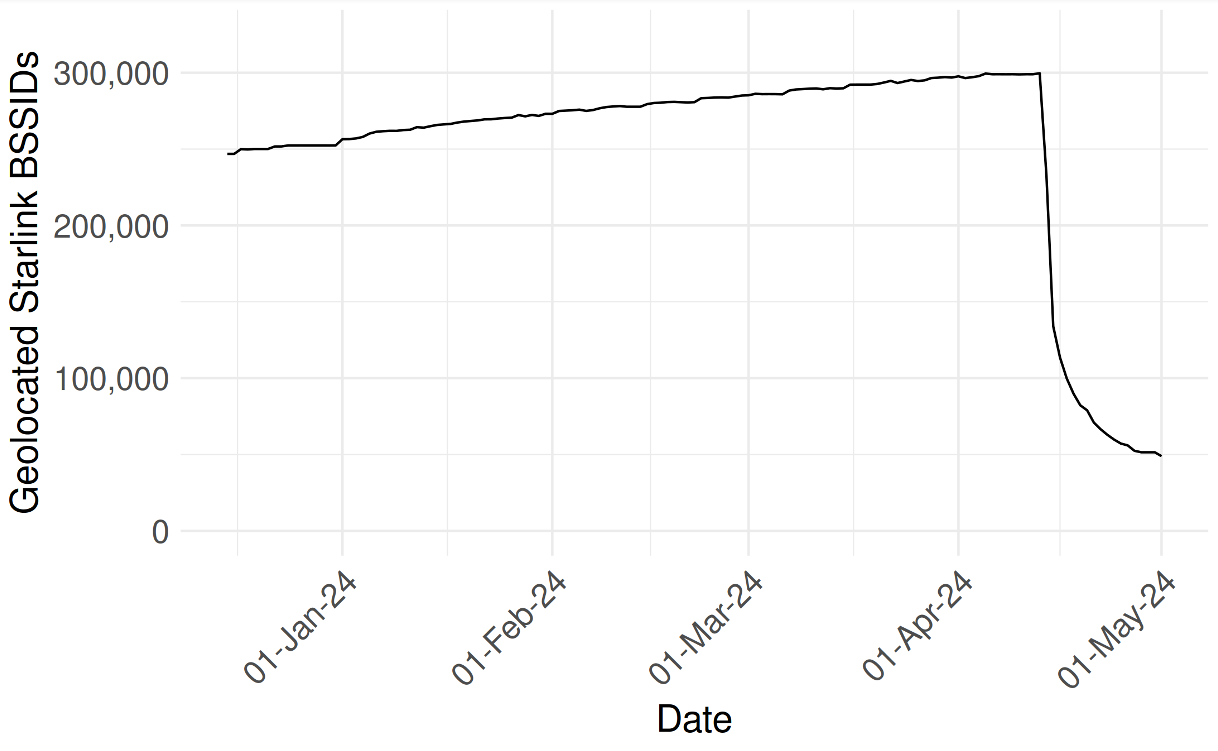

Levin and Rye said they shared their findings with Starlink in March 2024. Starlink, in turn, said it began shipping software updates in 2023 that force Starlink access points to randomize their BSSIDs.

Starlink’s parent SpaceX did not respond to requests for comment. But the researchers shared a graphic they said was created from their Starlink BSSID monitoring data, which shows that just in the past month there was a substantial drop in the number of Starlink devices that were geo-locatable using Apple’s API.

UMD researchers shared this graphic, which shows their ability to monitor the location and movement of Starlink devices by BSSID dropped precipitously in the past month.

They also shared a written statement they received from Starlink, which acknowledged that Starlink User Terminal routers originally used a static BSSID/MAC:

“In early 2023 a software update was released that randomized the main router BSSID. Subsequent software releases have included randomization of the BSSID of WiFi repeaters associated with the main router. Software updates that include the repeater randomization functionality are currently being deployed fleet-wide on a region-by-region basis. We believe the data outlined in your paper is based on Starlink main routers and or repeaters that were queried prior to receiving these randomization updates.”
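Starlink's statement does not describe how its routers pick a randomized BSSID. As a generic illustration only, the sketch below generates a random MAC address with the locally administered bit set and the multicast bit cleared, which is the conventional way to mark an address as not manufacturer-assigned. The function names are hypothetical.

```python
import secrets


def random_local_bssid():
    """Generate a random, locally administered, unicast MAC address.

    A generic sketch of BSSID randomization, not Starlink's implementation.
    """
    octets = bytearray(secrets.token_bytes(6))
    # Set bit 1 (locally administered) and clear bit 0 (multicast) of the first octet.
    octets[0] = (octets[0] | 0x02) & 0xFE
    return ":".join(f"{o:02x}" for o in octets)


def is_locally_administered(bssid):
    """Return True if the address has the locally administered bit set."""
    first_octet = int(bssid.split(":")[0], 16)
    return bool(first_octet & 0x02)
```

Because a randomized address changes over time, a location recorded for it cannot be tied to the same device across lookups.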

The researchers also focused their geofencing on the Israel-Hamas war in Gaza, and were able to track the migration and disappearance of devices throughout the Gaza Strip as Israeli forces cut power to the territory and bombing campaigns knocked out key infrastructure.

“As time progressed, the number of Gazan BSSIDs that are geolocatable continued to decline,” they wrote. “By the end of the month, only 28% of the original BSSIDs were still found in the Apple WPS.”
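The 28% figure is a retention rate: the share of an initial set of BSSIDs that still appears in a later lookup. A minimal sketch of that arithmetic, using a hypothetical `retention_rate` helper and synthetic identifiers rather than the researchers' data:

```python
def retention_rate(baseline, current):
    """Fraction of baseline BSSIDs that are still present in the current set."""
    if not baseline:
        raise ValueError("baseline set must not be empty")
    return len(baseline & current) / len(baseline)


# Synthetic example: 100 identifiers at the start, 28 of them still seen later.
baseline = {f"ap-{i}" for i in range(100)}
current = {f"ap-{i}" for i in range(28)} | {"new-ap"}
rate = retention_rate(baseline, current)
```

Newly seen identifiers, such as `new-ap` here, do not count toward retention because they were not in the baseline.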

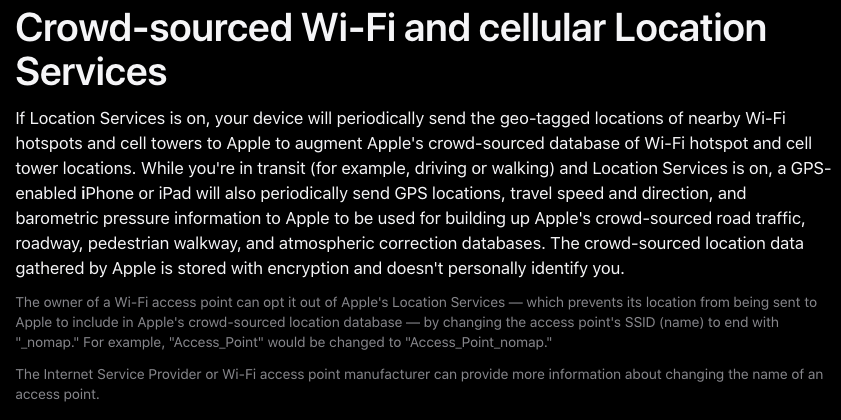

Apple did not respond to requests for comment. But in late March 2024, Apple quietly tweaked its privacy policy, allowing people to opt out of having the location of their wireless access points collected and shared by Apple — by appending “_nomap” to the end of the Wi-Fi access point’s name (SSID).
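The opt-out is a naming convention, so applying it comes down to a string check on the network name. A minimal sketch follows, with hypothetical helper names; the 32-byte cap reflects the maximum SSID length in Wi-Fi, which a renamed network must still respect.

```python
NOMAP_SUFFIX = "_nomap"
MAX_SSID_BYTES = 32  # maximum SSID length in bytes


def is_opted_out(ssid):
    """Return True if the network name already ends with the opt-out suffix."""
    return ssid.endswith(NOMAP_SUFFIX)


def opt_out_name(ssid):
    """Return the network name with "_nomap" appended, if it is not already there."""
    if is_opted_out(ssid):
        return ssid
    candidate = ssid + NOMAP_SUFFIX
    if len(candidate.encode("utf-8")) > MAX_SSID_BYTES:
        raise ValueError("name is too long to fit the suffix; shorten it first")
    return candidate
```

For example, `opt_out_name("HomeNet")` returns `"HomeNet_nomap"`. The suffix has to come at the very end of the name for the check to match.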

Apple updated its privacy and location services policy in March 2024 to allow people to opt out of having their Wi-Fi access point indexed by its service, by appending “_nomap” to the network’s name.

Rye said Apple’s response addressed the most depressing aspect of their research: That there was previously no way for anyone to opt out of this data collection.

“You may not have Apple products, but if you have an access point and someone near you owns an Apple device, your BSSID will be in [Apple’s] database,” he said. “What’s important to note here is that every access point is being tracked, without opting in, whether they run an Apple device or not. Only after we disclosed this to Apple have they added the ability for people to opt out.”

The researchers said they hope Apple will consider additional safeguards, such as proactive ways to limit abuses of its location API.

“It’s a good first step,” Levin said of Apple’s privacy update in March. “But this data represents a really serious privacy vulnerability. I would hope Apple would put further restrictions on the use of its API, like rate-limiting these queries to keep people from accumulating massive amounts of data like we did.”
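Rate limiting of the kind Levin suggests is often implemented with a token bucket: each client holds a limited number of tokens that refill at a fixed rate, and every query spends one. The sketch below is a generic illustration with hypothetical names and parameters. Nothing in the source says how, or whether, Apple would implement such a limit.

```python
class TokenBucket:
    """A simple token-bucket rate limiter (illustrative sketch)."""

    def __init__(self, capacity, refill_per_second, now=0.0):
        self.capacity = float(capacity)
        self.refill_per_second = float(refill_per_second)
        self.tokens = float(capacity)  # start with a full bucket
        self.last = now

    def allow(self, now, cost=1.0):
        """Return True and spend tokens if the request fits, else return False.

        now: current time in seconds, supplied by the caller so the logic is testable.
        """
        # Refill in proportion to the time elapsed since the last call.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.last = max(self.last, now)

        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

With a capacity of 2 and a refill rate of one token per second, a client can send two queries at once, is refused a third, and can send another a second later. In practice the caller would pass `time.monotonic()` as `now` and keep one bucket per client.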

The UMD researchers said they omitted certain details from their research to protect the users they were able to track, noting that the methods they used could present risks for those fleeing abusive relationships or stalkers.

“We observe routers move between cities and countries, potentially representing their owner’s relocation or a business transaction between an old and new owner,” they wrote. “While there is not necessarily a 1-to-1 relationship between Wi-Fi routers and users, home routers typically only have several. If these users are vulnerable populations, such as those fleeing intimate partner violence or a stalker, their router simply being online can disclose their new location.”

The researchers said Wi-Fi access points that can be created using a mobile device’s built-in cellular modem do not create a location privacy risk for their users because mobile phone hotspots will choose a random BSSID when activated.

“Modern Android and iOS devices will choose a random BSSID when you go into hotspot mode,” he said. “Hotspots are already implementing the strongest recommendations for privacy protections. It’s other types of devices that don’t do that.”

For example, they discovered that certain commonly used travel routers compound the potential privacy risks.

“Because travel routers are frequently used on campers or boats, we see a significant number of them move between campgrounds, RV parks, and marinas,” the UMD duo wrote. “They are used by vacationers who move between residential dwellings and hotels. We have evidence of their use by military members as they deploy from their homes and bases to war zones.”

A copy of the UMD research is available here (PDF).

From Slashdot:

Apple and Google have launched a new industry standard called “Detecting Unwanted Location Trackers” to combat the misuse of Bluetooth trackers for stalking. Starting Monday, iPhone and Android users will receive alerts when an unknown Bluetooth device is detected moving with them. The move comes after numerous cases of trackers like Apple’s AirTags being used for malicious purposes.

Several Bluetooth tag companies have committed to making their future products compatible with the new standard. Apple and Google said they will continue collaborating with the Internet Engineering Task Force to further develop this technology and address the issue of unwanted tracking.

This seems like a good idea, but I worry about false alarms. If I am walking with a friend, will it alert if they have a Bluetooth tracking device in their pocket?

Apple has unveiled significant security enhancements with the introduction of iOS 17.5, addressing nearly 15 vulnerabilities. Among the key features is a capability to thwart Bluetooth-based iPhone tracking, a move aimed at bolstering user privacy.

The latest iOS update, version 17.5, includes an alert system to notify users of potential cross-platform tracking attempts. This feature serves as a safeguard against unauthorized surveillance of iPhones via Bluetooth signals. Additionally, enhancements to the AirTag System provide added security measures, assisting users in locating misplaced items like car keys while safeguarding against potential privacy breaches.

Apple has also prioritized the resolution of malware concerns, particularly those exploiting the Find My app to track user locations and transmit data to criminal servers. Furthermore, updates have been implemented to fortify the security of Apple Maps navigation software, thwarting attempts by hackers to exploit vulnerabilities and compromise user data.

These proactive measures underscore Apple’s commitment to ensuring the privacy and security of its users. Regular updates are integral to mitigating potential threats and maintaining a secure user experience.

Looking ahead, iOS 17.5 may mark the culmination of Apple’s ongoing efforts in this regard, as attention shifts towards the forthcoming iOS 18 release. Anticipated to debut with AI-powered features, iOS 18 is expected to be unveiled at the Worldwide Developers Conference (WWDC) scheduled for June of this year.

The post New Apple iOS security update blocks Bluetooth Spying appeared first on Cybersecurity Insiders.