[By Gabi Reish, Chief Business Development and Product Officer, Cybersixgill]

In today’s rapidly expanding digital landscape, cybersecurity teams face ever-growing, increasingly sophisticated threats and vulnerabilities. They valiantly try to fight back with advanced threat intelligence, detection, and prevention tools. But many security leaders admit they’re not sure their actions are effective.

In a recent survey [1], 79 percent of respondents said they make decisions without insights into their adversaries’ actions and intent, and 84 percent of them worry they’re making decisions without an understanding of their organization’s vulnerabilities and risk.

What’s causing this uncertainty? The skills shortage is certainly one factor. There’s no getting away from this long-standing reality. According to a 2022 report [2], some 3.4 million security jobs are unfilled due to a lack of qualified applicants. But there’s far more to the story than a staffing shortage.

The Cyber Threat Intelligence Paradox

Cyber threat intelligence (CTI) attempts to understand adversaries and their potential actions before they occur so that organizations can prepare accordingly. CTI gathers information, as comprehensively as possible, about threat actors, their intentions, their intended targets, and the mechanisms and means they use to attack.

Cybersecurity teams lack confidence in their actions because of what I term the CTI Paradox: The more you have, the less you know. These teams are flooded with information that they can’t easily act upon because they can’t distinguish what’s relevant to their organization from what’s not. Additionally, they often have so many security tools designed to detect vulnerabilities, threats, intrusions and the like (firewalls, access management, endpoint protection, SIEM, SOAR, XDR, etc.) that they can’t operate them efficiently without a clear set of priorities.

To illustrate the point, my company, Cybersixgill, recently conducted a survey of more than 100 CTI practitioners and managers from around the globe. We learned that almost half the respondents said that they are still challenged, even with CTI tools at their disposal. Among the issues are the overwhelming volumes and irrelevance of data, the difficulty of gaining access to useful sources, and the complexity of integrating intelligence from different solutions.

It’s no surprise then that 82 percent of surveyed security professionals [3] view their CTI program as an academic exercise. They buy a product but have no strategy or plan for using it.

While this scenario may sound grim, there are options to help CISOs and their teams make effective use of CTI data and strengthen their cyber defense. Here are some suggestions for getting out of the CTI Paradox and gaining confidence that your organization is foiling cyberattackers effectively and efficiently.

The Four Pillars of Effective CTI

Fundamentally, a well-functioning security department needs two things: Timely, accurate insights about threats that are relevant to their organization, and the capacity to quickly respond to those threats. The first order of business is devising an overall strategy that reflects the organization’s unique security concerns. Next you need effective CTI that recognizes those concerns. And finally, you need the detection and prevention tools that allow you to take action in response to the relevant insights.

More specifically, resolving the CTI paradox means using CTI tools that provide support through four pillars:

- Data – information about cyberthreats that matter to the organization

- Skill sets – tools that match the team’s level of expertise in responding to those threats

- Use cases – tools that match the types of intelligence that the security team is interested in

- Compatibility – the fit between a CTI solution and the rest of the security stack

Let’s look at the four pillars, how and why organizations may be experiencing problems, and the best ways to solve them.

Data

Problem: It’s one thing to collect massive amounts of data. It’s another thing to refine that data so that security teams know what is relevant and what is peripheral. While it is fine to be aware of security threats on a global level – both literally and figuratively – companies need to zero in on the threats and vulnerabilities most relevant to their attack surface and prioritize them accordingly.

Solution: Focus on products that analyze and curate information rather than dumping everything on users and expecting them to filter out what is relevant and what’s noise.

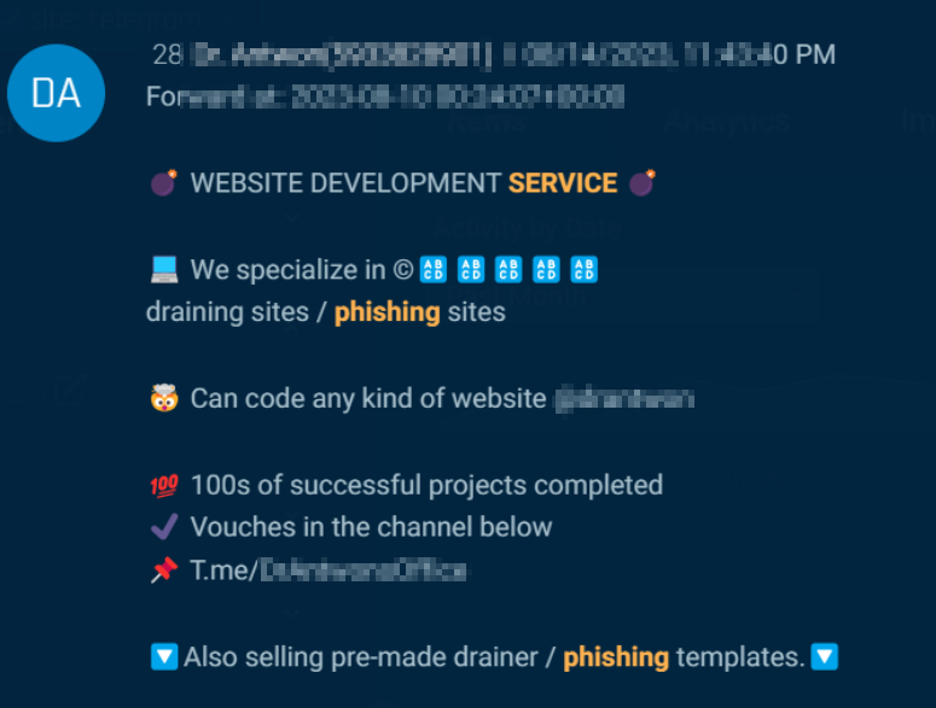

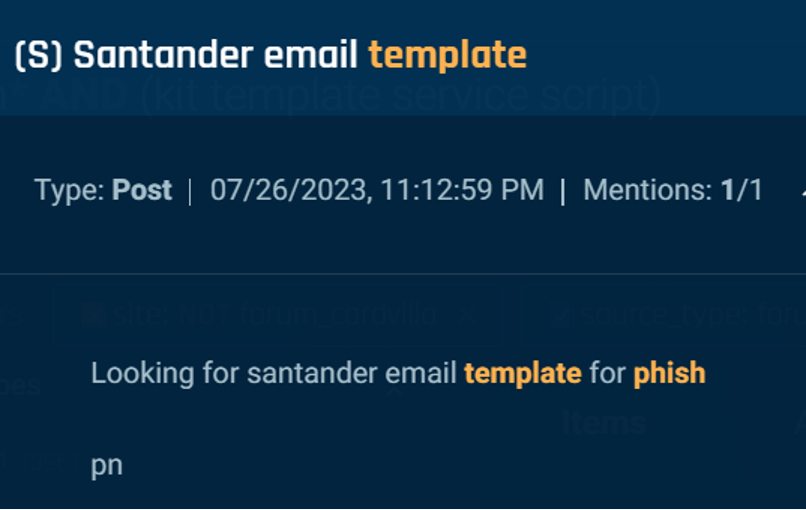

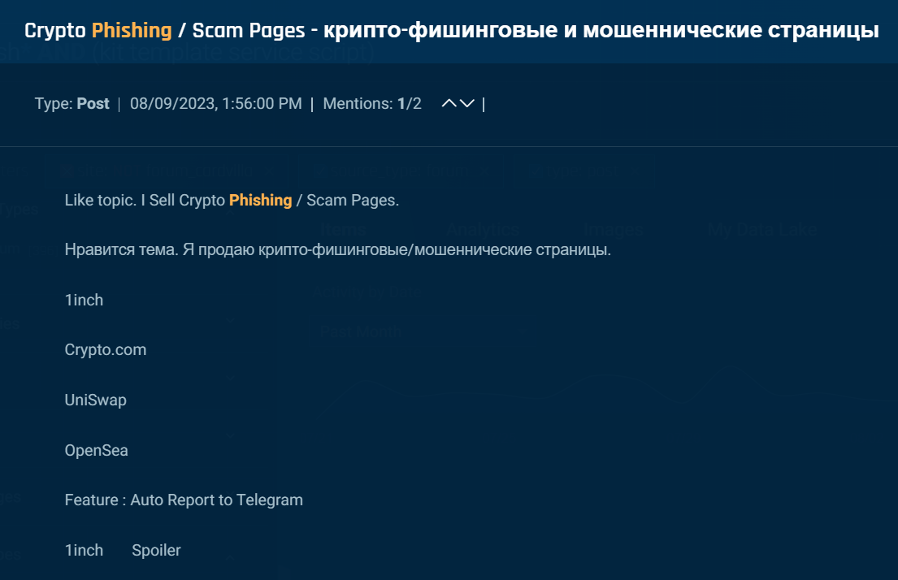

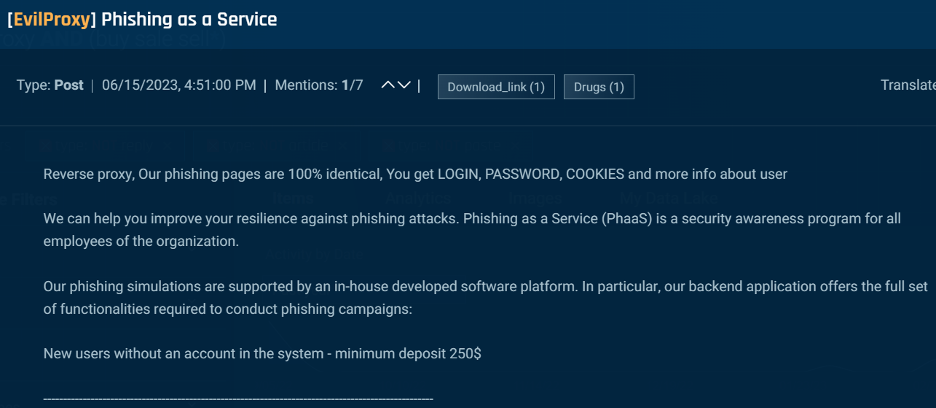

If you’re shopping for a solution, make sure the vendor first compiles an exhaustive list of potential threats from a wide range of sources, including underground forums and marketplaces, and that the information is continuously updated in real time. The vendor should then let you cull that list to a manageable level, using the tool to automatically contextualize and prioritize those threats so you can respond quickly and efficiently.
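To make "contextualize and prioritize" concrete, here is a minimal Python sketch of the idea, not any vendor's actual method. It assumes each intelligence item lists the products it affects and carries a severity score, and that you maintain an inventory of the products you actually run. The names `ThreatItem`, `relevance_score`, and `prioritize`, and the 1.5 weighting for active exploitation, are all hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThreatItem:
    """One intelligence item (hypothetical shape, for illustration only)."""
    title: str
    products: frozenset       # product names the item mentions
    severity: float           # assumed 0.0-10.0 scale
    actively_exploited: bool  # assumed flag supplied by the feed


def relevance_score(item, asset_inventory):
    """Score an item; anything that touches nothing we run scores 0."""
    if not (item.products & asset_inventory):
        return 0.0
    score = item.severity
    if item.actively_exploited:
        score *= 1.5  # arbitrary illustrative weight, not a standard
    return score


def prioritize(items, asset_inventory, limit=10):
    """Drop irrelevant items and return the top `limit`, highest score first."""
    scored = [(relevance_score(item, asset_inventory), item) for item in items]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in relevant[:limit]]
```

In this sketch, a high-severity item about a product you don't run is filtered out entirely, while a moderate item that is being actively exploited against something in your inventory rises to the top. A real tool would weigh far more context, but the principle is the same: relevance to your attack surface comes before raw severity.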

Skill sets

Problem: Security teams sometimes find themselves working with tools that do not match their cybersecurity skills. A tool that provides access to raw, highly detailed information may be too complex for a more junior practitioner. Another tool may be too simplistic for a security team operating at an advanced level and fail to provide sufficient information for an adequate response.

Solution: Teams need to use CTI tools that match or complement their skill sets. You also want to select tools that match your organization’s security maturity and appetite for data – neither too high nor too low for your needs. Ideally, the tool you use incorporates generative AI geared specifically to threat intelligence data.

Use cases

Problem: Organizations may receive information irrelevant to their primary use cases. CTI vendors typically address a dozen or more intelligence use cases such as brand protection, third-party monitoring, phishing, geopolitical issues, and more. Receiving intelligence to address a use case irrelevant to your organization’s security concerns isn’t helpful.

Solution: Find a solution that matches your use-case needs and provides information that is clear, relevant, and specific to those use cases. For example, if your organization is particularly subject to ransomware, find one that offers the best, most up-to-date information about ransomware threats.

Compatibility

Problem: To adequately handle cyber threat intelligence, an organization needs to be able to consume incoming data, integrate it with other elements of its security stack (SIEM, SOAR, XDR, and whatever other tools are useful for the organization), and take action rapidly. Without this compatibility among tools, organizations may not be able to mitigate threats quickly enough. Additionally, manually porting information from one area to another may become onerous enough that the CTI tool is eventually ignored.

Solution: In this environment, you need to rely on automated responses to threats as much as possible, so make sure whatever CTI tool you acquire integrates seamlessly with your security ecosystem. You’ll want a tool that has the APIs needed to share information readily with the rest of your security stack. Check the vendor’s compatibility list to be certain that the CTI tool will sync with the security tools most important to your organization.
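As a rough illustration of what sharing information with the rest of the stack can involve, the Python sketch below normalizes a CTI record into a flat JSON event of the kind a SIEM might ingest. It is a sketch under stated assumptions: the field names (`indicator`, `indicator_type`, `confidence`, `observed_at`, `tags`) and the function names are hypothetical, and no real vendor API or SIEM schema is implied. Check your own tools' documentation for the formats they accept.

```python
import json
from datetime import datetime, timezone


def to_siem_event(intel, source="cti-feed"):
    """Map one CTI record (a dict) to a flat event; field names are assumed."""
    required = ("indicator", "indicator_type", "confidence")
    missing = [key for key in required if key not in intel]
    if missing:
        raise ValueError(f"intel record missing fields: {missing}")
    return {
        # Fall back to the current UTC time if the feed gave no timestamp.
        "timestamp": intel.get("observed_at")
        or datetime.now(timezone.utc).isoformat(),
        "source": source,
        "indicator": intel["indicator"],
        "indicator_type": intel["indicator_type"],
        "confidence": int(intel["confidence"]),
        # De-duplicate and sort tags so downstream rules see stable input.
        "tags": sorted(set(intel.get("tags", []))),
    }


def to_json_lines(records):
    """Serialize records as newline-delimited JSON, one event per line."""
    return "\n".join(
        json.dumps(to_siem_event(record), sort_keys=True) for record in records
    )
```

Rejecting incomplete records up front, rather than forwarding them, is a deliberate choice in this sketch: automated responses are only as trustworthy as the data that triggers them.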

The CTI Paradox does not have to go unsolved. Curated, contextualized threat intelligence, relevant to an organization’s use cases, eliminates the paralysis that comes from too much data. Well-integrated tools, appropriate for the security teams implementing them, give organizations the defense mechanisms required to detect and respond rapidly and efficiently.

By being smart about threat intelligence and your organizational status and requirements, you can move from doubt and uncertainty to clarity, focus, and effective direction.

Gabi Reish, the chief business development and product officer of Cybersixgill, has more than 20 years of experience in IT/networking industries, including product management and product/solution marketing.

The post The Cyber Threat Intelligence Paradox – Why too much data can be detrimental and what to do about it appeared first on Cybersecurity Insiders.