Abstract:

SASE (Secure Access Service Edge) is a comprehensive solution that aims to improve the security of an organization's network by providing centralized and cloud-based security services. This solution streamlines access to resources and enhances the security of the network edge. SASE is important because it helps organizations cope with the challenges posed by the increasing complexity of a cloud-forward system that relies on a distributed workforce and SaaS services. The future of cloud security lies in SASE, which promises to provide organizations with a comprehensive and secure solution to manage their network security needs.

What is SASE?

Secure Access Service Edge (SASE) is a network architecture designed to give organizations stronger security, better performance, and simpler operations. The term was introduced in 2019 by Gartner, the research and advisory company. SASE addresses the growing need for secure, streamlined network access in the face of digital transformation, edge computing, and remote work. With SASE, organizations get a flexible, scalable network that connects employees and offices around the world, regardless of location or device, so they can keep pace with the changing needs of their digital business operations.

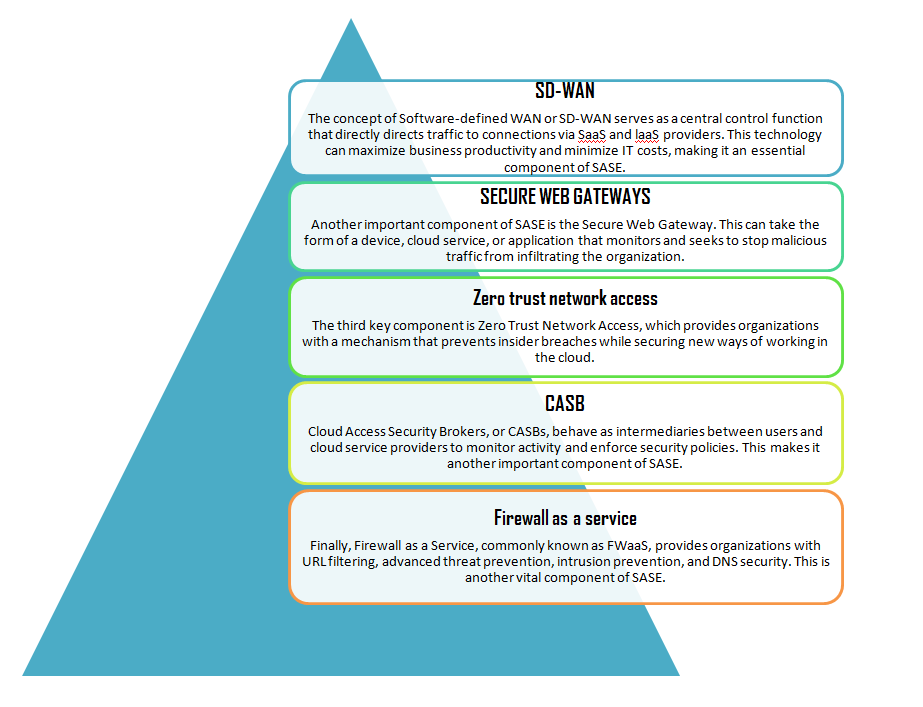

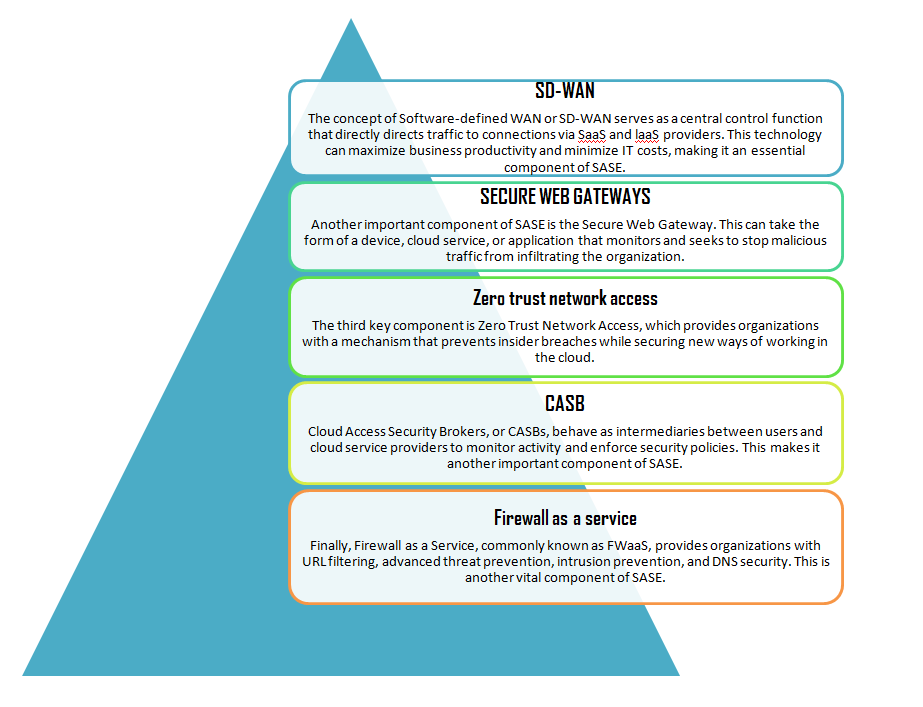

SASE merges Wide Area Network (WAN) capabilities with cloud-based network security services, including zero-trust network access, secure web gateways, cloud access security brokers, and firewall as a service (FWaaS). This combination lets organizations meet their network and security needs through a single service provider, reducing the number of vendors and streamlining network access.

What is the importance of SASE?

As companies continue to transition to cloud-based systems, they face the challenge of enhancing security while also simplifying their operations and reducing costs. The move to remote work has only intensified the demand for hybrid cloud solutions and software as a service (SaaS), making these requirements even more pressing.

One technology that has garnered significant attention in the enterprise security space is Secure Access Service Edge (SASE). This architecture offers an attractive solution by combining network components like VPN and SD-WAN with security features like Zero Trust and contextual access.

While SASE is still largely a concept or goal for most organizations, leading tech vendors such as Cisco, Zscaler, Akamai, Palo Alto Networks, and McAfee have adopted the idea and are promoting components of their products as essential for realizing SASE. Despite its allure, companies need to overcome various obstacles to make SASE a reality. The concept may sound simple in theory, but implementing it in practice requires careful planning and execution.

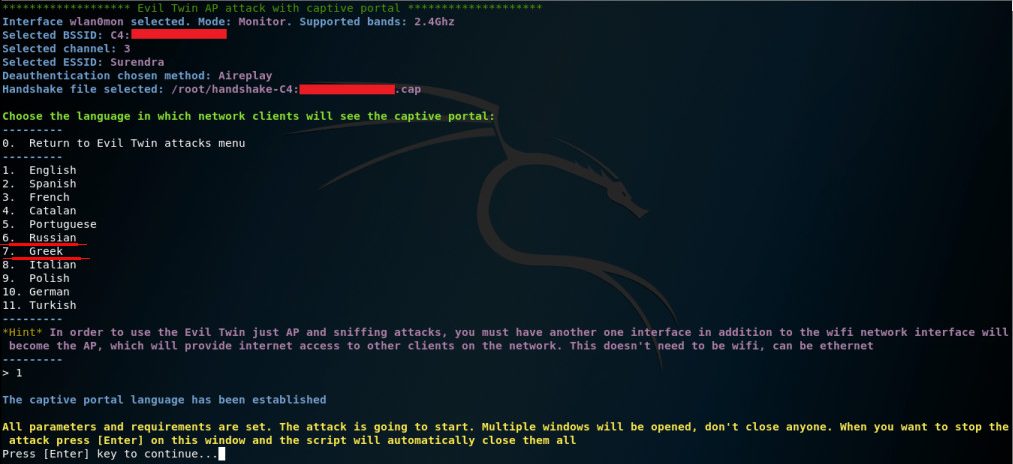

The core security components of SASE

- Cloud Access Security Broker (CASB)

CASBs perform several security functions for cloud-based services, including detecting shadow IT (systems and services used without the IT department's approval), securing confidential data through access control, and ensuring compliance with data privacy regulations.

- Secure Web Gateways (SWG)

SWGs play a crucial role in protecting against cyber threats and preventing data breaches by filtering out unwanted content from web traffic, blocking unauthorized user activity, and enforcing an organization's security policies. These gateways are particularly suitable for providing security for remote workforces as they can be deployed in a distributed environment.

- Firewall-as-a-Service (FWaaS)

FWaaS refers to firewalls that are delivered as a service from the cloud, protecting cloud-based platforms, infrastructure, and applications against cyber-attacks. This service provides a set of security capabilities, including URL filtering, intrusion prevention, and uniform policy management across all network traffic.

- Zero Trust Network Access (ZTNA)

ZTNA platforms provide enhanced security to protect against potential data breaches by locking down all internal resources, applications, and devices from public view. They require real-time verification of every user, ensuring that only authorized users have access to sensitive information.
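
The per-request verification that ZTNA describes can be illustrated with a minimal sketch. It assumes a deny-by-default policy that checks identity, device posture, and an allow-list on every request. The names `AccessRequest`, `ALLOWED`, and `authorize` are hypothetical and do not come from any vendor's product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """One request to reach an internal resource (hypothetical model)."""
    user: str
    mfa_verified: bool      # identity was verified for this session
    device_compliant: bool  # device passed a posture check
    resource: str


# Hypothetical allow-list: which users may reach which internal resources.
ALLOWED = {
    "alice": {"payroll-app", "wiki"},
    "bob": {"wiki"},
}


def authorize(req: AccessRequest) -> bool:
    """Deny by default; allow only if every check passes for this request."""
    if not req.mfa_verified:
        return False
    if not req.device_compliant:
        return False
    # Unknown users get an empty set, so they are denied as well.
    return req.resource in ALLOWED.get(req.user, set())
```

In this sketch, a verified user on a compliant device is still refused any resource that is not explicitly listed for them, which is the sense in which internal resources stay hidden by default.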

The best reasons to switch to SASE

Secure Access Service Edge (SASE) is the future of network security and is rapidly gaining popularity among organizations. SASE is a cloud-based architecture that combines Virtual Private Network (VPN) and Software-Defined Wide Area Network (SD-WAN) functions with cloud security services such as firewalls, secure web gateways, cloud access security brokers, DNS security, data loss prevention, and zero trust network access. It provides a centralized and simplified security architecture for all users and traffic by applying security at cloud-delivered access points close to the user, rather than at a single on-premises site. The traditional VPN approach is becoming less effective as the number of remote locations and cloud services grows. SASE addresses these challenges by offering secure data exchange without relying on a central hub for security functions. This is achieved through unified policy management based on user identities and through flexible transport routes.

Here are five compelling reasons why organizations should consider switching to SASE:

- Transparency: SASE provides a comprehensive overview and transparent reporting, allowing organizations to detect and respond to cyber threats more efficiently.

- Secure network access: SASE provides secure network access from anywhere and on any device through end-to-end encryption and reliable protection on public networks.

- Resource savings: SASE shifts much of the day-to-day network operations work to the provider, reducing in-house effort and costs.

- Security policies: SASE enables organizations to define corporate policies centrally and is compatible with the Zero Trust concept. It checks devices for trustworthiness and user identities to block unauthorized access.

- Improved performance: Outsourcing to the cloud and simplifying the architecture can significantly increase performance as it eliminates traffic redirection to a central computing system and reduces latency.

The Adoption of Secure Access Service Edge (SASE)

Gartner, a global research and advisory firm, predicted that SASE adoption would reach 20% of enterprises by 2023. The firm believes that demand for SASE capabilities will have a major impact on enterprise network and security architecture and will change the competitive landscape in the industry.

In the coming years, the trend toward SASE adoption is expected to accelerate. Further research by Palo Alto Networks and Gartner outlines the following predictions for the future of SASE:

- By 2025, 80% of enterprises are expected to have adopted a strategy to unify their web, cloud services, and private application access through a SASE/SSE architecture, up from 20% in 2021.

- By 2025, 65% of enterprises are expected to have consolidated their SASE components into one or two explicitly partnered SASE vendors, up from 15% in 2021.

- By 2025, 50% of SD-WAN purchases are expected to be part of a single-vendor SASE offering, up from less than 10% in 2021.

Challenges of SASE Deployment

The implementation of SASE, or the Secure Access Service Edge, brings with it several challenges. SASE involves the integration of security services with network services, making access to SaaS and multi-cloud functions secure. It includes features found in SD-WAN deployments, such as path resiliency and redundancy, app routing, visibility and reporting, vendor-specific software-defined capabilities, and VPN.

One of the prerequisites for SASE is a virtualized network. SD-WAN, which helps virtualize networks and their operations, has already been widely adopted, but it requires the replacement of older, single-function switches and routers with new equipment. Most enterprises have virtualized a significant portion of their networks, but the more challenging part of realizing SASE is the need for a security architecture that can be fully integrated and managed like software-defined networks.

One challenge is the need for a unified approach to secure access, threat protection, policy management, and device management. Many enterprises have a disparate range of security components in place, which makes it difficult to integrate them into a cohesive security architecture. Another obstacle is the lack of coordination between networking and security operations. Typically, these two functions are managed by separate departments that may not have a close working relationship. For SASE to be deployed successfully, network operations and security operations must collaborate and align their efforts; without that collaboration, a SASE deployment is unlikely to succeed.

The majority of enterprises currently have a collection of security products in place, which are often standalone. Some organizations have dozens or even hundreds of unique security applications running on different platforms, from data centers and cloud instances to networks, hardware endpoints, and individual apps. Even the essential VPN for secure network connections may not be compatible with all the devices and servers within an organization, and the increasing number of network options (such as 5G, WiFi 6, and broadband) adds to the compatibility challenges.

How to Approach Adopting SASE?

- Data Protection: Ensure that there is consistency in policies and procedures for data protection both in transit and at rest. Implement access control, encryption, and segmentation of data to protect it.

- Data Distribution Model: Consider the entire data landscape and understand where the data will be stored, as it might be stored in multiple locations.

- Improving Efficiency: Look at current projects and determine if they need to be modified to accommodate cloud-hosted services in the next 2-4 years, backup services (whether local or cloud-based), and sensitive services.

- Data Flow and Migration: Evaluate the current data flow within the organization's on-premises deployment and make changes to ensure a smooth flow. Have a comprehensive plan to identify how the data will move across environments to maintain its integrity.

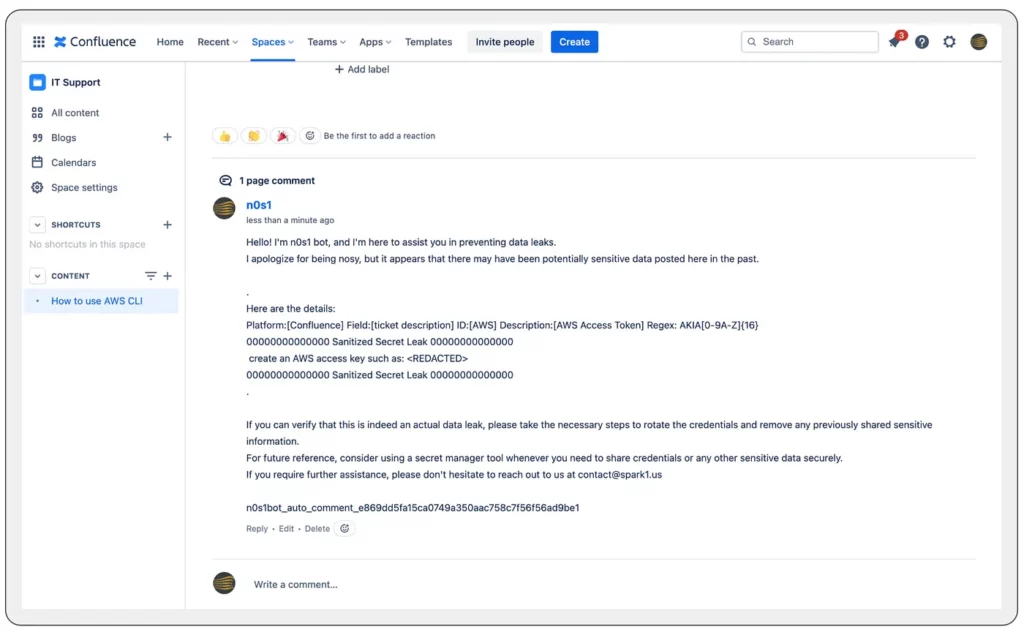

- Centralized Visibility and Policy Control: Have a transparent approach to documenting network users, the data they share, connections they access, access authorization, and policies for non-compliance. Focus on the entire network, not just the edge.

- Data Segmentation: Handle security incidents at the edge, and protect sensitive data residing in the data center by segmenting it from the rest of the network. Keep visibility throughout the environment, not just at the edge, to keep the data protected.

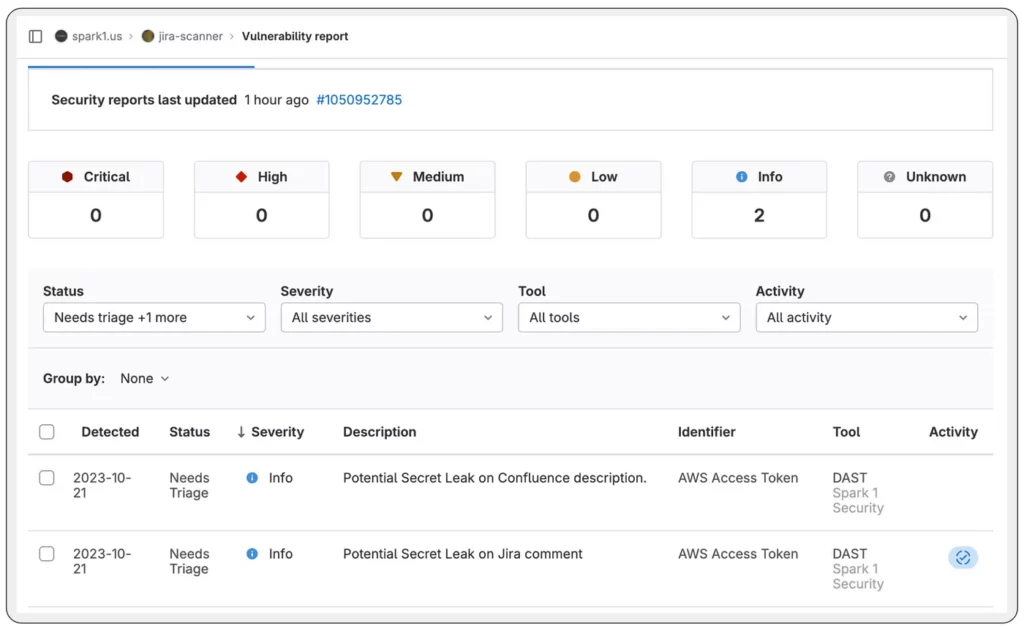

The Implementation of SASE Poses Significant Integration Challenges

Contrary to what some vendors may claim while marketing their products, the reality is that implementing SASE is a substantial integration challenge. Smaller organizations may lack the necessary resources to achieve this, even if they have some components in place such as SD-WAN and cloud access gateways.

Even larger organizations face difficulties in integrating their network and security tools and cloud management products, making sure that information can be shared and managed through a single interface. Although it is possible to implement SASE without a unified control plane or a single management console, this solution is more costly in terms of the required skills, manpower, and time compared to a unified management interface. Additionally, it increases the likelihood of issues arising due to a lack of visibility when non-compatible systems are manually managed. Some network operators, such as Verizon, offer "SASE as a Service" built on top of their connectivity and security service operations. However, enterprises will still need to manage the relationship and allocate resources to ensure that the latest network and security updates are fully implemented as their business needs and infrastructure change.

For many companies, the best approach to SASE is to engage a systems integrator that can not only integrate the necessary tools but also manage the day-to-day operations of the SASE architecture implementation. However, enterprises should be aware that SASE is a constantly evolving target, with few companies having all the components in place, and those that do likely face upgrades and infrastructure changes as capabilities mature. Furthermore, service providers often have their preferred partners, which may not be compatible with the vendor products that the enterprise currently uses. Despite this, this route may still be advantageous as these operators can leverage their influence over vendor apps and their experience in making SASE effective.

Core Capabilities of the SASE Framework

The SASE (Secure Access Service Edge) framework has several essential components to ensure the secure access of a remote workforce and prevent data breaches in a business. The COVID-19 pandemic has shifted the workplace landscape, with many employees still working remotely, making it crucial for companies to have the right tools to secure their networks.

- One of these tools is SD-WAN as a service, which enables secure access to cloud-based resources and applications. It creates a virtual overlay for network traffic and distributes that traffic across the available WAN links to ensure optimal performance.

- Zero Trust Network Access (ZTNA) is another core capability of SASE that plays an important role in securing the corporate network. It requires authentication from employees before granting access to the network. After verifying their identities, ZTNA enables access but restricts their movement within the network, ensuring that only authorized users are present in the corporate network.

- The Secure Web Gateway (SWG) and Firewall as a Service (FWaaS) are security components that inspect user traffic in the cloud, scanning for unauthorized access and malware. The Cloud Access Security Broker (CASB) sits between users and cloud applications and monitors the data flowing between them to protect confidential data and support compliance with data privacy regulations.
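
How SD-WAN distributes traffic across WAN links can be illustrated with a small path-selection sketch. It assumes each link reports latency and packet loss, and that an application class has thresholds for both. The `Path` type, the `pick_path` function, and the sample numbers are hypothetical and do not describe a specific vendor's algorithm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Path:
    """A WAN link with its most recent measurements (hypothetical model)."""
    name: str
    latency_ms: float
    loss_pct: float
    up: bool = True


def pick_path(paths, max_latency_ms, max_loss_pct):
    """Return the lowest-latency path that is up and meets both thresholds.

    If no path meets the thresholds, fall back to the lowest-latency path
    that is up. Return None when every path is down.
    """
    healthy = [p for p in paths if p.up]
    if not healthy:
        return None
    eligible = [
        p for p in healthy
        if p.latency_ms <= max_latency_ms and p.loss_pct <= max_loss_pct
    ]
    return min(eligible or healthy, key=lambda p: p.latency_ms)
```

For example, with an MPLS link at 40 ms and 0.1% loss and a broadband link at 25 ms and 2.5% loss, a voice policy of at most 50 ms and 1% loss would select MPLS even though broadband has lower latency.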

Top benefits of SASE in Cybersecurity

Traditional remote access applications are often not secure enough, lacking important security functions such as IPS, SWG, and NGFW. This leaves businesses vulnerable, and many respond by bolting on separate point products to fill the gaps. However, this patchwork approach does not provide the level of visibility and security desired.

A holistic security approach that incorporates the SASE framework can address these security failures. SASE integrates unique security features into the underlying network, including anti-malware, firewalling, IPS, and URL filtering mechanisms. This way, all cloud, mobile, and website edges receive equal protection.

The SASE network includes several routing and security features, including zero-trust network access, remote browser isolation (RBI), malware protection, firewall as a service, DNS reputation, intrusion prevention and detection, secure web access, and cloud access security broker. With the right SASE structure, businesses can be protected against cyber-attacks and detect the source of malware.

In addition to providing comprehensive security features, SASE also offers cost savings. The traditional approach of sourcing, provisioning, maintaining, and monitoring multiple point solutions can drive up enterprise OpEx and CapEx. With SASE, businesses can avoid dealing with multiple cloud solution vendors and instead get all the solutions from a single provider. This reduces the costs associated with network maintenance, upgrades, IT staffing, patches, and appliance purchases. SASE also simplifies the network by reducing the need for virtual and physical appliances in favor of a single cloud-native solution.

SASE is designed to meet the demands of real-time applications by providing high-performance network connections. Traditional VPN security methods have been hindered by security-related delays that impact application performance. To stay ahead of the competition, businesses need automated solutions that can secure connections quickly and efficiently. SASE provides these capabilities, enabling businesses to scale their network as needed.

- Simplified Network Management

SASE removes much of the difficulty and cost of managing a complex network. In traditional networks, managing multiple devices such as NGFW, VPN, SWG, and SD-WAN in different office locations requires a large IT staff, and as the number of offices increases, so does the need for additional personnel. SASE addresses this problem by providing a single cloud-based solution that centralizes control and protection of the entire network, which simplifies management and reduces the need for a large IT staff.

- Complete SD-WAN Integration

The adoption of Software-Defined Wide Area Network (SD-WAN) technology has changed the way businesses connect to the cloud. With SASE, businesses can move away from traditional, proprietary WAN solutions and benefit from lower operational costs, increased flexibility, and improved performance. SD-WAN optimizes traffic flow through a centralized control plane, resulting in better application performance, lower IT spending, increased productivity, and a better user experience.

- Stable Data Protection for Your Business

Every day, businesses handle a large amount of important data, including sensitive customer information and confidential business data. Protecting this information is critical to avoiding data loss or exposure to malicious actors. The use of a SASE framework offers a solution to this challenge, with built-in automatic cloud data loss prevention (DLP). The SASE framework integrates DLP into key control points, ensuring that all forms of data are secure and protected. This eliminates the need for multiple protection tools, as the SASE DLP covers various cloud environments, devices, and applications.

SASE DLP provides businesses with the ability to enforce protection policies across their entire network, from a single central point. It also authenticates devices and users before granting access to the business applications and data, further enhancing the security of the network.
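
As an illustration of the kind of check a DLP control point might run, the sketch below scans outbound text for payment card numbers. It assumes one common technique, a pattern match followed by a Luhn checksum to cut false positives. The function names are hypothetical, and real DLP engines use far richer detectors.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:      # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list:
    """Return digit strings in text that look like valid card numbers."""
    hits = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits


def allow_upload(text: str) -> bool:
    """Hypothetical policy hook: block content containing card numbers."""
    return not find_card_numbers(text)
```

Because the same function can be called from every control point, a single policy definition covers web uploads, email, and cloud applications alike, which mirrors the centralized enforcement described above.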

- Enhanced Network Performance

With the integration of SASE, businesses can experience improved network performance. The solution constantly tracks and monitors the flow of data, giving businesses a comprehensive view of how their data is being distributed across different data centers and cloud environments.

By monitoring both inbound and outbound traffic, businesses can receive real-time information from a single network interface or portal. This reduces the delays that were common with traditional network monitoring methods.

With SASE, businesses can enjoy fast and reliable network connections, regardless of the remote location of the portal user. This enhances the overall user experience and improves the efficiency of remote data access.

How to choose partners for SASE?

Choosing the right partner for your SASE solution is a crucial step in ensuring the security and efficiency of your cloud-based business processes. To ensure you make the right choice, here are some factors you should consider:

Ensure that the SASE solution offered by the potential partner is compatible with the cloud computing platform you use. For example, if you use Microsoft Azure, your SASE must be functional with it.

Ask the vendor to provide case studies from previous projects and consider reaching out to firms that have worked with them to gain insights into their partnership capabilities.

- International credentials and certificates

Check for certifications such as ISO/IEC 27001, alignment with ISO/IEC 27002, and HIPAA compliance where applicable, to verify the partner's ability to handle sensitive data securely.

Compare prices offered by different vendors and make a decision based on your budget and needs.

Evaluate the provider's customer service policies, as different providers have different approaches. Consider the level of support you need based on your organization's IT capabilities.

Why SASE Is the Future of Remote Access?

The traditional networking model has been around for a while now, with applications and data residing in a central data center. This central location serves as the hub for users, workstations, and applications to access company resources, which is usually achieved through a local private network or a secondary network connected to the primary network through a VPN or other secure line. However, with the evolution of technology, this traditional approach has become increasingly insufficient in meeting the demands of a cloud-forward system that operates with a distributed workforce and utilizes SaaS services. It is no longer practical to direct all network traffic through a corporate data center when data and applications are hosted in a distributed cloud environment.

SASE (Secure Access Service Edge) offers a solution to this problem by implementing network controls at the cloud edge instead of in a centralized data center. This approach streamlines security and network services, providing a more secure network edge without the need for a layered stack of cloud services with separate management and configuration requirements. Organizations can take advantage of SASE by implementing identity-based zero-trust access policies at the network edge. This expands the network's security perimeter to cover remote users, offices, devices, and applications, enhancing overall security. SASE gives organizations a secure, flexible, and efficient way to meet the demands of a rapidly changing technological landscape.

The implementation of SASE (Secure Access Service Edge) brings with it numerous benefits that make it a valuable solution for organizations looking to improve their network security. Some of these benefits include:

- Improved Performance: Service and application performance are optimized through a routing mechanism that minimizes latency and directs traffic through a high-performance SASE backbone. This is particularly important for latency-sensitive applications such as video and VoIP.

- Streamlining Access: SASE architectures provide consistent, secure, and fast access to all resources from any physical location, unlike data center-based access models, which can be slow and unreliable.

- Cost Optimization: The cloud model used by SASE is cost-efficient, allowing organizations to spread their costs across monthly fees, instead of making an upfront capital investment. It also allows businesses to consolidate their vendors and reduce the number of virtual and physical appliances and their associated costs, including purchasing and maintenance costs. Additionally, delegating the responsibilities of upgrades and maintenance to the SASE provider reduces costs even further.

- Simplifying the System: SASE's cloud-based security model and single-vendor WAN functions simplify the system compared to traditional multi-vendor approaches, which utilize different security appliances across different locations. The single-pass traffic inspection architecture of SASE helps to simplify the system further by decrypting and inspecting traffic streams in a single pass using different policy engines, instead of combining different inspection services.

- Enhanced Usability: SASE often reduces the number of agents and applications required by a device, replacing them with a single, user-friendly application. This ensures a consistent user experience regardless of the user's location or the resource being accessed.
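
The single-pass inspection idea mentioned above can be sketched as follows: traffic is decrypted once, and every policy engine inspects the same decrypted payload. The engine functions, the `CountingDecryptor` class, and the XOR "cipher" are hypothetical stand-ins for illustration only; XOR is not real encryption.

```python
from typing import Callable, List, Optional

# An engine inspects a decrypted payload and returns a finding, or None.
Engine = Callable[[bytes], Optional[str]]


def url_filter(payload: bytes) -> Optional[str]:
    """Toy URL filter that flags one hypothetical blocked domain."""
    return "blocked domain" if b"bad.example" in payload else None


def malware_scan(payload: bytes) -> Optional[str]:
    """Toy signature scan that flags one hypothetical marker string."""
    return "malware signature" if b"EICAR" in payload else None


class CountingDecryptor:
    """Stand-in for TLS termination that counts how often it is called."""

    def __init__(self, key: int = 0x2A) -> None:
        self.key = key
        self.calls = 0

    def __call__(self, data: bytes) -> bytes:
        self.calls += 1
        return bytes(b ^ self.key for b in data)  # XOR is symmetric


def single_pass_inspect(ciphertext: bytes,
                        decrypt: Callable[[bytes], bytes],
                        engines: List[Engine]) -> List[str]:
    """Decrypt once, then let every engine inspect the same payload."""
    payload = decrypt(ciphertext)
    findings = []
    for engine in engines:
        finding = engine(payload)
        if finding is not None:
            findings.append(finding)
    return findings
```

In a chained design, each appliance would decrypt and re-encrypt the stream separately; here the decryptor's call counter stays at one no matter how many engines are registered.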

Conclusion

In conclusion, SASE is a promising solution that provides organizations with the benefits of simplified systems, streamlined access, cost optimization, improved performance, and enhanced usability. The implementation of SASE requires careful planning and a clear understanding of an organization's current network security architecture, but the result is a more secure and efficient network. The future of cloud security is closely tied to SASE, which will continue to play an important role in protecting organizations from threats and helping them manage their network security needs. As the demand for cloud-based services continues to grow, organizations must take steps to adopt SASE and stay ahead of the curve in terms of network security.
